T3

Data Quality

Cleaning and manipulating data is often considered the:

- Most Time-Consuming task

- Least Enjoyable task (by some!)

Problems:

- Missing Values: Information that is not available because it wasn’t collected or because it was sensitive; features that are not applicable in all cases

- Duplicated Records: Same (or similar) data collected from different sources

- Noise: Modifications to the original records (data that is corrupted or distorted) due to technological limitations, sensor error or even human error

- Outliers: A data point that differs significantly from other observations
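A minimal sketch of how the first two problems can be spotted with pandas. The toy table and its column names (`age`, `salary`) are hypothetical and only serve as an illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical toy dataset: one missing value, one duplicated row, one extreme salary.
df = pd.DataFrame({
    "age": [25, 32, np.nan, 32, 41],
    "salary": [1200.0, 1500.0, 1300.0, 1500.0, 90000.0],
})

# Missing values: number of NaN entries per column.
missing_per_column = df.isna().sum()

# Duplicated records: rows that are identical to an earlier row.
n_duplicates = int(df.duplicated().sum())

# Drop exact duplicates, keeping the first occurrence of each row.
deduplicated = df.drop_duplicates()
```

Near-duplicates ("similar" records from different sources) usually need a domain-specific matching rule; `drop_duplicates` only catches exact matches.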

Data Exploration

What to do?

- Understand the data and its characteristics

- Evaluate its quality

- Find patterns and relevant information

How?

- Central Tendency: average, mode, median…

- Statistical dispersion: variance, standard deviation, interquartile range…

- Probability distribution: Gaussian, Uniform, Exponential…

- Correlation/Dependence: between pairs of features, with the dependent feature…

- Data visualization: tables, charts, boxplots, scatter plots, histograms, …

- Chi-square test of independence: do the values of one categorical variable depend on the values of other categorical variables?

- This test is also known as the chi-square test of association.
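As a rough illustration, the chi-square statistic can be computed by hand from a contingency table. The counts below are made up, and the sketch uses the plain Pearson statistic without any continuity correction.

```python
import numpy as np

# Hypothetical contingency table: rows are the categories of one variable,
# columns are the categories of the other.
observed = np.array([[30.0, 10.0],
                     [20.0, 40.0]])

row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
total = observed.sum()

# Expected counts if the two variables were independent.
expected = row_totals @ col_totals / total

# Pearson chi-square statistic and degrees of freedom.
chi2 = float(((observed - expected) ** 2 / expected).sum())
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
```

The statistic is then compared with the chi-square distribution with `dof` degrees of freedom (for 1 degree of freedom the 5% critical value is about 3.84). In practice a library routine such as `scipy.stats.chi2_contingency` also returns the p-value; note that it applies a continuity correction to 2x2 tables by default.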

- Do we want to keep highly-correlated features?

- Both positively and negatively correlated ones?

- What about the correlation between the dependent and the independent features?

- Independent variables (X) : input features/input vector

- Dependent Variable (y) : target/class/label
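The summary statistics and correlation checks listed above can be sketched with pandas. The dataset and the column names `x1`, `x2` and `y` are hypothetical.

```python
import pandas as pd

# Hypothetical dataset: two independent features (X) and one dependent feature (y).
df = pd.DataFrame({
    "x1": [1.0, 2.0, 3.0, 4.0, 5.0],
    "x2": [2.0, 4.0, 6.0, 8.0, 10.0],  # an exact multiple of x1
    "y": [1.0, 3.0, 2.0, 5.0, 4.0],
})

# Central tendency and statistical dispersion of one feature.
mean_x1 = df["x1"].mean()
median_x1 = df["x1"].median()
std_x1 = df["x1"].std()  # sample standard deviation (ddof=1)
iqr_x1 = df["x1"].quantile(0.75) - df["x1"].quantile(0.25)

# Pairwise Pearson correlation between all columns.
corr = df.corr()

# Correlation of each independent feature with the dependent one.
corr_with_target = corr["y"].drop("y")
```

Here `x1` and `x2` carry the same information, so one of them is a candidate for removal, while the correlation with `y` hints at how useful each input might be.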

Data Visualization

Graphs!!!

- Histplots

- Boxplots

- Trendlines

Data Preparation

Basics

A set of basic data preparation techniques can be used:

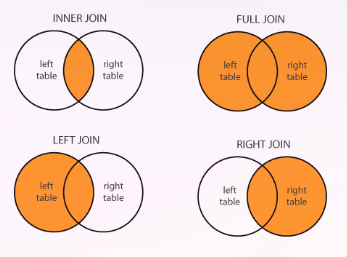

- Union/intersection of columns;

- Concatenation;

- Sorters;

- Filters (column, row, nominal, rule-based, …);

- Basic aggregations (counts, unique, mean/sum, …)
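A short pandas sketch of these basic operations. The two tables and their columns (`city`, `sales`, `region`) are invented for the example.

```python
import pandas as pd

# Hypothetical tables coming from two sources.
a = pd.DataFrame({"city": ["Porto", "Braga"], "sales": [10, 20]})
b = pd.DataFrame({"city": ["Lisboa", "Porto"], "sales": [30, 5],
                  "region": ["south", "north"]})

# Concatenation keeping the union of columns (missing cells become NaN) ...
union = pd.concat([a, b], join="outer", ignore_index=True)
# ... or only the intersection of columns.
intersection = pd.concat([a, b], join="inner", ignore_index=True)

# Sorter: order rows by a column.
sorted_rows = intersection.sort_values("sales", ascending=False)

# Rule-based row filter and column filter.
big_sales = intersection[intersection["sales"] >= 10]
only_city = intersection[["city"]]

# Basic aggregations: count, number of unique values, sum per group.
n_rows = len(intersection)
unique_cities = intersection["city"].nunique()
sales_per_city = intersection.groupby("city")["sales"].sum()
```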

Advanced

How?

- Feature scaling

- Outlier detection

- Feature selection

- Missing Values treatment

- Nominal value discretization

- Binning

- Feature Engineering

Data Preparation: Feature Scaling

Many classifiers use distance metrics (e.g., Euclidean distance) and, if one feature has a much broader range of values than the others, the distance will be governed by that feature. Hence, the ranges should be normalized so that each feature contributes proportionately to the final distance.

Normalization

Rescaling data so that all values fall within a fixed range, for example between 0 and 1.
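A minimal sketch of min-max normalization with NumPy, applied column by column. The feature matrix is hypothetical.

```python
import numpy as np

# Hypothetical feature matrix: rows are observations, columns are features.
X = np.array([[1.0, 100.0],
              [2.0, 300.0],
              [3.0, 500.0]])

# Min-max normalization per feature: (x - min) / (max - min).
col_min = X.min(axis=0)
col_max = X.max(axis=0)
X_norm = (X - col_min) / (col_max - col_min)  # a constant column would divide by zero
```

After rescaling, both features span the same range even though the second one was originally two orders of magnitude larger.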

Standardization / Z-score normalization

Rescaling the distribution of values so that the mean of observed values is 0 and the standard deviation is 1. Assumes observations fit a Gaussian distribution with a well-behaved mean and standard deviation, which may not always be the case.
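As a sketch, standardization subtracts each feature's mean and divides by its standard deviation. The feature matrix is hypothetical, and the population standard deviation (NumPy's default, `ddof=0`) is an arbitrary choice here.

```python
import numpy as np

# Hypothetical feature matrix: rows are observations, columns are features.
X = np.array([[1.0, 100.0],
              [2.0, 300.0],
              [3.0, 500.0]])

# Z-score per feature: (x - mean) / std.
mean = X.mean(axis=0)
std = X.std(axis=0)  # population standard deviation (ddof=0)
X_std = (X - mean) / std
```

When a model is evaluated on held-out data, the mean and standard deviation are normally computed on the training set only and then reused to transform the test set.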

Data Preparation: Outlier Detection

- Statistical-based strategy: Z-Score, Box Plots, …

- Knowledge-based strategy: Based on domain knowledge. For example, exclude everyone with a monthly salary higher than 1M € …

- Model-based strategy: Using models such as one-class SVMs, isolation forests, clustering, …

To keep the dataset size, we may want to cap outliers instead of dropping them. However, it can affect the distribution of data!
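The statistical strategy and the drop-versus-cap choice can be sketched with the box-plot (IQR) rule. The salaries are made up, and the 1.5 multiplier is the usual box-plot convention rather than a fixed requirement.

```python
import numpy as np

# Hypothetical monthly salaries with one extreme value.
salaries = np.array([900.0, 1000.0, 1100.0, 1200.0, 1300.0, 1400.0, 50000.0])

# Box-plot rule: flag values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
q1, q3 = np.percentile(salaries, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (salaries < lower) | (salaries > upper)

# Option 1: drop the outliers (the dataset shrinks).
dropped = salaries[~is_outlier]

# Option 2: cap them at the fences (size is kept, but the distribution changes).
capped = np.clip(salaries, lower, upper)
```

Capping keeps all seven observations, but the extreme value is replaced by the upper fence, which is exactly the distortion of the distribution mentioned above.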

Data Preparation: Feature Selection / Dimensionality Reduction

Which features should we use to create a predictive model? Select a sub-set of the most important features to reduce dimensionality.

- May significantly affect the performance of a model

- Reduces overfitting (less opportunity to make decisions based on noise)

- Can improve accuracy

- Helps reduce the complexity of a model (reduces training time)

What can we remove:

- Redundant features (duplicate)

- Irrelevant and unneeded features (non-useful)

Process:

- Remove a feature if the percentage of missing values is higher than a threshold;

- Use the chi-square test to measure the degree of dependency between a feature and the target class;

- Remove a feature if its data are highly skewed;

- Remove a feature if its standard deviation is low;

- Remove features that are highly correlated with each other.
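Some of these filter rules can be sketched with pandas. The dataset, the column names and all thresholds (50% missing, near-zero standard deviation, 0.95 correlation) are illustrative assumptions, not recommended values.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset; thresholds below are illustrative only.
df = pd.DataFrame({
    "mostly_missing": [np.nan, np.nan, np.nan, 1.0, np.nan],
    "constant": [7.0, 7.0, 7.0, 7.0, 7.0],
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_m": [1.5, 1.6, 1.7, 1.8, 1.9],  # redundant with height_cm
    "weight": [80.0, 55.0, 90.0, 60.0, 75.0],
})

# 1. Drop features whose share of missing values exceeds a threshold.
missing_share = df.isna().mean()
df = df.loc[:, missing_share <= 0.5]

# 2. Drop features with (near-)zero standard deviation.
df = df.loc[:, df.std() > 1e-8]

# 3. For each highly correlated pair, drop the later feature of the pair.
corr = df.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
df = df.drop(columns=to_drop)

selected = list(df.columns)
```

Only the upper triangle of the correlation matrix is inspected so that one feature of each correlated pair survives instead of both being dropped.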

Principal Component Analysis (PCA): a technique to reduce the dimension of the feature space. The goal is to reduce the number of features without losing too much information. A popular application of PCA is visualizing higher-dimensional data.
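A minimal PCA sketch using the singular value decomposition in NumPy. The synthetic data (three features, the third almost a copy of the first) is an assumption made so that two components are enough.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 100 observations, 3 features, the third nearly a copy of the first.
x1 = rng.normal(size=100)
x2 = rng.normal(size=100)
X = np.column_stack([x1, x2, x1 + 0.01 * rng.normal(size=100)])

# Center each feature, then take the SVD of the centered matrix.
X_centered = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Share of the total variance explained by each principal component.
explained_ratio = S**2 / np.sum(S**2)

# Project onto the first two components, e.g. for a 2-D scatter plot.
X_2d = X_centered @ Vt[:2].T
```

The rows of `Vt` are the principal directions; inspecting `explained_ratio` is one way to judge how much information is lost when the remaining components are discarded.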

Wrapper methods: use an ML algorithm to select the most important features! Treat the selection of a set of features as a search problem: prepare different combinations, evaluate and compare them! Measure the “usefulness” of features based on the classifier performance.
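One possible search strategy is greedy forward selection, sketched below. To stay self-contained it swaps the classifier for a least-squares linear regression and scores candidates by validation error; the data, the helper names `validation_mse` and `forward_selection`, and the train/validation split are all hypothetical.

```python
import numpy as np

def validation_mse(X_train, y_train, X_val, y_val, features):
    """Fit least squares on the chosen features and return the validation MSE."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train[:, features]])
    A_val = np.column_stack([np.ones(len(X_val)), X_val[:, features]])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((A_val @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val, n_features):
    """Greedily add the feature that lowers the validation error the most."""
    selected = []
    remaining = list(range(X_train.shape[1]))
    while len(selected) < n_features:
        scores = {j: validation_mse(X_train, y_train, X_val, y_val, selected + [j])
                  for j in remaining}
        best = min(scores, key=scores.get)
        selected.append(best)
        remaining.remove(best)
    return selected

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
# Hypothetical target: only features 2 and 4 matter.
y = 4.0 * X[:, 2] - 3.0 * X[:, 4] + 0.1 * rng.normal(size=300)

X_train, X_val, y_train, y_val = X[:200], X[200:], y[:200], y[200:]
chosen = forward_selection(X_train, y_train, X_val, y_val, n_features=2)
```

Each step refits the model once per remaining feature, which is why this kind of search becomes expensive as the number of features grows.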

Embedded methods: algorithms that already have built-in feature selection. Lasso, for example, has its own feature selection mechanism: if a feature’s weight is zero, then it has no importance! Regularization: constrain/regularize or shrink the coefficient estimates towards zero.
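To illustrate how an L1 penalty drives some weights to exactly zero, here is a small coordinate-descent sketch of Lasso in NumPy. It is a teaching sketch, not the implementation of any particular library; the data, the penalty `alpha=0.5` and the function names are assumptions, and the features are assumed to be roughly centered so no intercept is fitted.

```python
import numpy as np

def soft_threshold(value, threshold):
    """Shrink value towards zero by threshold; return 0 if it is within the threshold."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_coordinate_descent(X, y, alpha, n_iter=200):
    """Minimize (1/2n)*||y - Xw||^2 + alpha*||w||_1 by cyclic coordinate descent."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iter):
        for j in range(d):
            # Residual with feature j's current contribution removed.
            residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ residual / n
            z = X[:, j] @ X[:, j] / n
            w[j] = soft_threshold(rho, alpha) / z
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
# Hypothetical target: depends only on features 0 and 1.
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.1 * rng.normal(size=200)

w = lasso_coordinate_descent(X, y, alpha=0.5)
selected = np.flatnonzero(w != 0.0)  # features with a non-zero weight
```

The surviving weights are also shrunk towards zero compared with the true coefficients, which is the regularization effect described above.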

Data Preparation: Missing Values

Options on how to deal with it:

- Remove

- Mean

- Interpolation

- Mask

- ....
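The options above can be sketched with pandas on a toy series. Reading "Mask" as a boolean missing-value indicator is an assumption made for this example.

```python
import numpy as np
import pandas as pd

# Hypothetical series with two missing values.
s = pd.Series([1.0, np.nan, 3.0, np.nan, 5.0])

# Remove: drop the observations with missing values.
removed = s.dropna()

# Mean: replace missing values with the mean of the observed ones.
mean_filled = s.fillna(s.mean())

# Interpolation: estimate missing values from their neighbours (linear by default).
interpolated = s.interpolate()

# Mask (assumed meaning): keep an indicator of which values were missing.
mask = s.isna()
```

Interpolation only makes sense when the rows have a meaningful order, such as a time series.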

Data Preparation: Nominal Value Discretization

Categorical data, often called nominal data, are variables that contain label values rather than numeric ones. Several methods may be applied:

- one-hot encoding

- label encoding - Each category is mapped to an integer. Integer values have a natural ordered relationship with each other, and ML models may be able to pick up on that relationship.

- binary encoding - For categorical features where no such ordinal relationship exists. However, it does not work so well for a huge number of categories...
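A small pandas sketch of the three encodings. The `size` column, the category order and the bit layout of the binary encoding are assumptions for illustration; dedicated encoder libraries may number the categories differently.

```python
import pandas as pd

# Hypothetical categorical feature.
df = pd.DataFrame({"size": ["small", "large", "medium", "small"]})

# One-hot encoding: one indicator column per category.
one_hot = pd.get_dummies(df["size"], prefix="size")

# Label encoding: map each category to an integer (explicit order chosen here).
order = {"small": 0, "medium": 1, "large": 2}
label = df["size"].map(order)

# Binary encoding: write the integer code in base 2, one column per bit.
n_bits = max(order.values()).bit_length()
binary = pd.DataFrame({f"size_bit{b}": (label // 2**b) % 2 for b in range(n_bits)})
```

With three categories, one-hot encoding needs three columns while the binary encoding needs two; the gap widens as the number of categories grows.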

Data Preparation: Binning / Discretization

Binning can make the model more robust and help prevent overfitting. However, it may penalize the model’s performance, since every time you bin something you sacrifice information.
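A short sketch of binning with pandas. The ages, bin edges and labels are hypothetical.

```python
import pandas as pd

# Hypothetical numeric feature.
ages = pd.Series([3, 17, 25, 42, 68, 90])

# Fixed-edge binning: intervals (0, 18], (18, 65], (65, 120].
bins = [0, 18, 65, 120]
labels = ["minor", "adult", "senior"]
age_group = pd.cut(ages, bins=bins, labels=labels)

# Equal-frequency binning: three bins with (roughly) the same number of values.
age_tercile = pd.qcut(ages, q=3, labels=["low", "mid", "high"])
```

After binning, 25 and 42 are indistinguishable to the model, which is the loss of information mentioned above.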

Data Preparation: Feature Engineering

The process of creating new features! The goal is to improve the performance of ML models.

Example: from the creation date of an observation (2021-10-29 16h30) what can we extract?

We may extract new features such as:

- Year, month and day

- Hour and minutes

- Day of week (Friday)

- Is Weekend? (No)

- Is Holiday? (No)
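These date-based features can be extracted with Python's standard `datetime` module. The `HOLIDAYS` set is a hypothetical placeholder, since a real holiday calendar depends on the country or region.

```python
from datetime import datetime

# Creation date of the observation from the example above.
created_at = datetime(2021, 10, 29, 16, 30)

# Hypothetical holiday calendar as (month, day) pairs.
HOLIDAYS = {(1, 1), (12, 25)}

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]

features = {
    "year": created_at.year,
    "month": created_at.month,
    "day": created_at.day,
    "hour": created_at.hour,
    "minute": created_at.minute,
    "day_of_week": DAY_NAMES[created_at.weekday()],  # weekday(): Monday is 0
    "is_weekend": created_at.weekday() >= 5,
    "is_holiday": (created_at.month, created_at.day) in HOLIDAYS,
}
```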